PaLM 2 (Pathways Language Model 2) is a large language model (LLM) developed by Google AI. It is the successor to PaLM, which was announced in April 2022. PaLM 2 is trained on a large dataset of text and code, and it can generate text, translate between languages, write creative content, and answer questions. This post introduces PaLM 2: what it can do, how it was built, how it was evaluated, and how it compares with PaLM and GPT-4.

Introduction

PaLM 2 is a large language model developed by Google. According to Google, it excels at math, code, advanced reasoning, and multilingual tasks such as translation. Google attributes this to its training data, which includes scientific and mathematical text as well as more than 100 spoken languages and more than 20 programming languages.

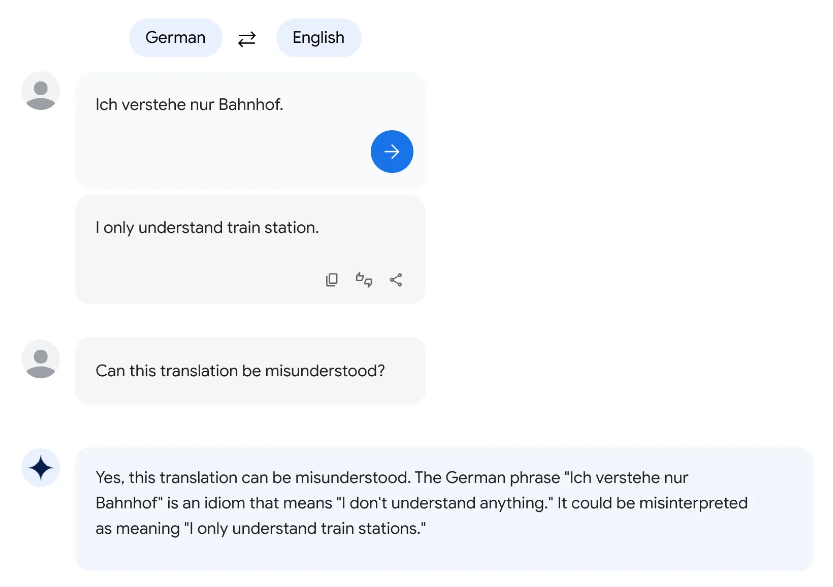

PaLM 2 already powers several Google products, such as Bard, Google Workspace, and the PaLM API, so you may be using it without realizing it. It can also translate between programming languages, which makes it useful for collaboration between colleagues who work in different languages. One of PaLM 2’s most impressive features is its ability to generate and understand nuanced language, including idioms and riddles. This requires understanding the ambiguous and figurative meaning of words rather than only their literal meaning.

Google has been a leader in AI for years, and many of its advancements, including the transformer architecture, Tensor Processing Units (TPUs), and open-source frameworks like TensorFlow and JAX, have become staples in the industry. PaLM 2 was built using compute-optimal scaling, which means that the model size and the training dataset size are scaled in proportion to each other. Google presents this approach as making PaLM 2 more efficient and, as a result, more environmentally friendly.

Google is committed to releasing safe and ethical tools to the public, and PaLM 2 is no exception. It has been trained to avoid and de-escalate aggressive and toxic prompts and to steer conversations in a more positive direction.

Google is also using PaLM 2 to advance research in healthcare, cybersecurity, and other fields. As we move toward multimodal models, the capabilities of PaLM 2 and other models will become even more compelling, allowing them to handle text, code, audio, video, and images.

Google CEO Sundar Pichai announced at Google I/O 2023 that PaLM 2 comes in four model sizes, from smallest to largest: Gecko, Otter, Bison, and Unicorn.

What PaLM 2 can do

PaLM 2 is a state-of-the-art language model with improved multilingual, reasoning and coding capabilities.

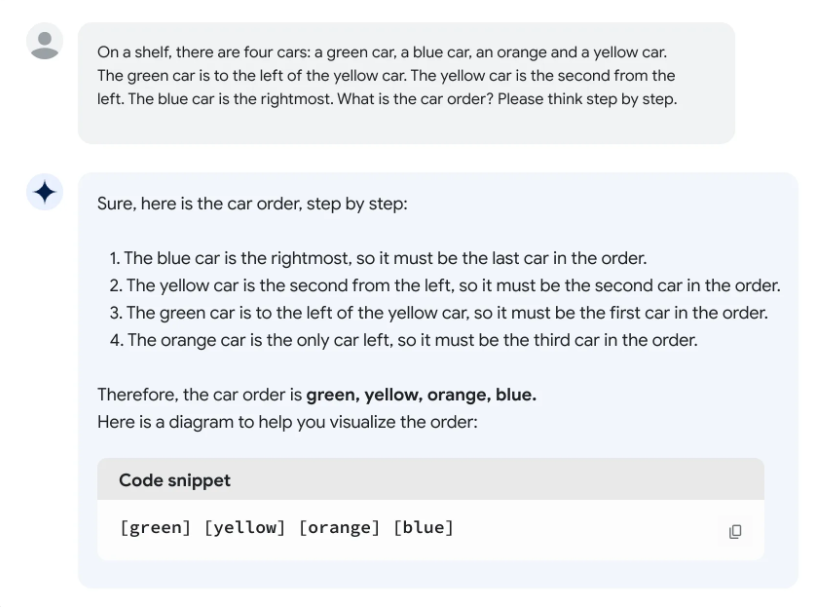

Reasoning:

PaLM 2 is an advanced language model that has shown improved capabilities in logic, common sense reasoning, and mathematics due to its wide-ranging dataset that includes scientific papers and web pages with mathematical expressions. It can break down complex tasks into simpler subtasks and has a better understanding of the nuances of human language compared to previous LLMs like PaLM. This includes excelling at understanding riddles and idioms, which require comprehending the ambiguous and figurative meanings of words rather than their literal meanings.

Multilingual translation:

PaLM 2 has been trained on a large corpus of multilingual text in over 100 languages, which has greatly enhanced its ability to comprehend, generate and translate complex text such as idioms, poems and riddles in a wide range of languages. It can even pass advanced language proficiency exams at the “mastery” level. Compared to its predecessor, PaLM, PaLM 2 was pre-trained on parallel multilingual text and a much larger corpus of different languages, making it excel at multilingual tasks.

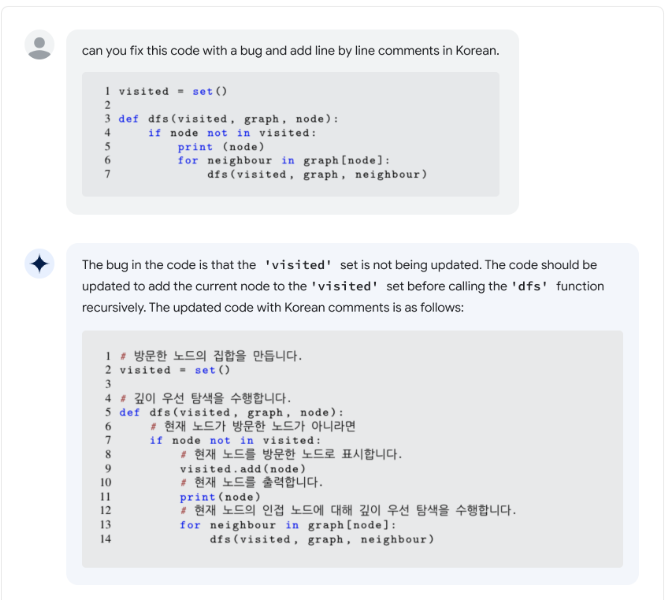

Coding:

PaLM 2 has been pre-trained on a vast amount of data from various sources, including webpages and source code. As a result, it is highly skilled in generating code for popular programming languages such as Python and JavaScript. Additionally, it can produce specialized code for languages like Prolog, Fortran, and Verilog. Its language capabilities can also facilitate collaboration among teams working in different languages.

Building PaLM 2

PaLM 2 is an advanced language model that excels at tasks such as reasoning, translation, and code generation. It improves upon its predecessor, PaLM, by incorporating three key research advancements:

Use of compute-optimal scaling: Compute-optimal scaling is a technique that involves scaling the model size and training dataset size in proportion to each other. This technique makes PaLM 2 smaller than PaLM, but more efficient with better overall performance. This includes faster inference, fewer parameters to serve, and a lower serving cost.

Improved dataset mixture: PaLM 2 improves upon its predecessor by utilizing a more diverse and multilingual pre-training dataset. This new mixture includes a wide range of content such as web pages, scientific papers, mathematical equations, and hundreds of human and programming languages.

Updated model architecture and objective: PaLM 2 has an improved architecture and was trained on a variety of different tasks, all of which helps PaLM 2 learn different aspects of language.
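The compute-optimal scaling idea described above can be illustrated with a rough calculation. The sketch below is not Google’s recipe for PaLM 2, which this post does not detail. It assumes two rules of thumb from the public scaling-law literature: training compute is roughly 6 × parameters × tokens, and the token count is kept at a fixed ratio of about 20 tokens per parameter. The function name `compute_optimal_allocation` and the default ratio are illustrative assumptions.

```python
import math


def compute_optimal_allocation(flops_budget, tokens_per_param=20.0):
    """Split a training FLOP budget between model size and dataset size.

    Assumptions (not taken from the PaLM 2 announcement):
      * training compute C is approximated as 6 * N * D
      * tokens D are kept proportional to parameters N: D = tokens_per_param * N
    """
    if flops_budget <= 0 or tokens_per_param <= 0:
        raise ValueError("budget and ratio must be positive")
    # Substitute D = r * N into C = 6 * N * D and solve for N.
    n_params = math.sqrt(flops_budget / (6.0 * tokens_per_param))
    n_tokens = tokens_per_param * n_params
    return n_params, n_tokens


# Print the allocation for three hypothetical compute budgets.
for budget in (1e21, 1e22, 1e23):
    n, d = compute_optimal_allocation(budget)
    print(f"{budget:.0e} FLOPs -> {n / 1e9:.1f}B params, {d / 1e9:.0f}B tokens")
```

Under these assumptions, multiplying the compute budget by 100 multiplies both the parameter count and the token count by 10. That is what scaling the two in proportion means: neither the model nor the dataset grows alone.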

Evaluating PaLM 2

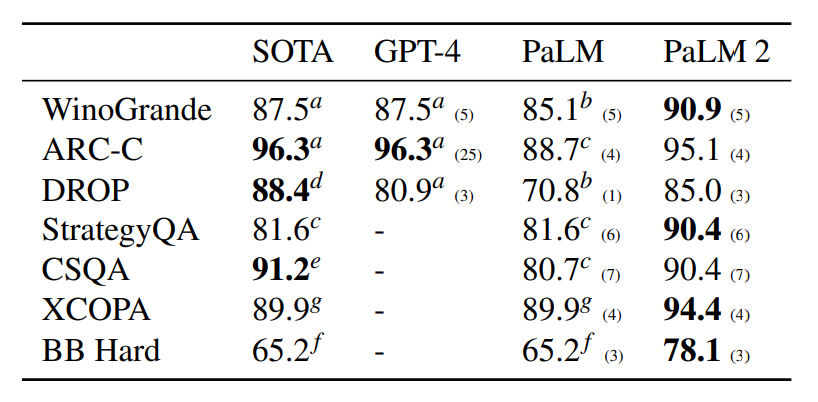

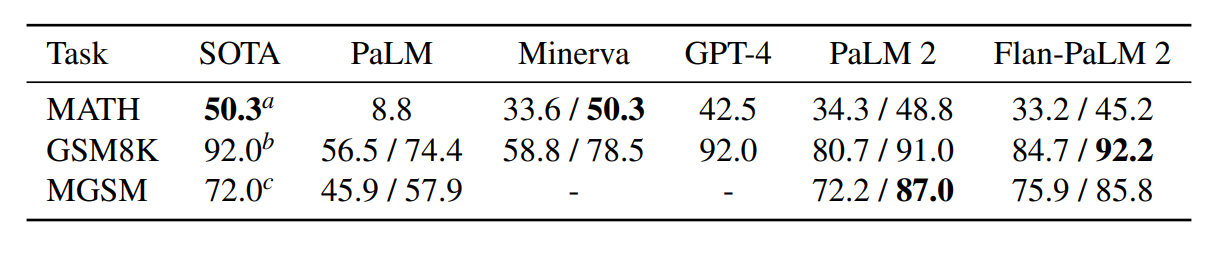

PaLM 2 outperforms its predecessor, PaLM, in multilingual capabilities and achieves better results on benchmarks such as XSum, WikiLingua and XLSum. It also surpasses PaLM and Google Translate in translation ability for languages like Portuguese and Chinese. Additionally, PaLM 2 sets a new standard on reasoning benchmark tasks like WinoGrande and BigBench-Hard. PaLM 2 also continues Google’s approach to responsible AI development and safety in the following areas.

Pre-training Data: Google removes sensitive personally identifiable information from the pre-training data, filters out duplicate documents to reduce the risk of memorization, and shares an analysis of how people are represented in the data.

New Capabilities: PaLM 2 has shown enhanced abilities in classifying toxicity across multiple languages and also includes features to prevent the generation of toxic content.

Evaluations: Google assesses the potential for harm and bias in PaLM 2 across various applications, including dialogue, classification, translation, and question answering. This involves creating new evaluations to measure potential harm in generative question-answering and dialogue settings related to toxic language and social bias associated with identity terms.
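The duplicate filtering mentioned under Pre-training Data can be sketched in a few lines. Google’s actual pipeline is not described in this post, so the following is only a minimal illustration of exact-duplicate removal: each document is normalized (lowercased, whitespace collapsed) and hashed, and a document is dropped if its hash has been seen before. The function names and the sample corpus are hypothetical, and a production pipeline may use more elaborate near-duplicate detection.

```python
import hashlib


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants hash alike."""
    return " ".join(text.lower().split())


def drop_exact_duplicates(documents):
    """Keep the first occurrence of each document, compared after normalization.

    Illustrative only: this is exact matching on normalized text,
    not the method used for PaLM 2.
    """
    seen = set()
    unique = []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


# Hypothetical corpus: the second entry differs only in case and spacing.
corpus = [
    "PaLM 2 is a language model.",
    "palm 2   is a language model.",
    "Gecko is the smallest size.",
]
print(drop_exact_duplicates(corpus))
```

Storing only hashes keeps memory use proportional to the number of unique documents rather than to their total length.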

Powering over 25 Google products and features

Google unveiled more than 25 new products and features that are powered by PaLM 2. This means that the latest advancements in AI technology are being integrated directly into Google products and made available to people worldwide, including consumers, developers, and businesses of all sizes. Here are some examples:

- Google is expanding Bard to new languages, thanks to the improved multilingual capabilities of PaLM 2.

- Workspace features in Gmail, Google Docs, and Google Sheets use PaLM 2 to help with writing and organization, so that work gets done faster.

- Google’s health research teams have trained Med-PaLM 2 with medical knowledge to answer questions and summarize insights from dense medical texts. According to Google, it achieves state-of-the-art results in medical competency and is the first large language model to perform at an “expert” level on U.S. Medical Licensing Exam-style questions. Google is also adding multimodal capabilities to synthesize information like x-rays and mammograms, with the goal of improving patient outcomes in the future. Google planned to make Med-PaLM 2 available to a small group of Cloud customers in summer 2023 for feedback to identify safe and helpful use cases.

- Sec-PaLM is a specialized version of PaLM 2 that has been trained to handle security use cases. It represents a significant advancement in cybersecurity analysis. Available through Google Cloud, Sec-PaLM uses AI to analyze and explain the behavior of potentially malicious scripts. This allows it to quickly and accurately detect which scripts pose a threat to individuals and organizations.

- Duet AI for Google Cloud, a generative AI collaborator designed to help users learn, build and operate faster than ever before, is powered by PaLM 2.

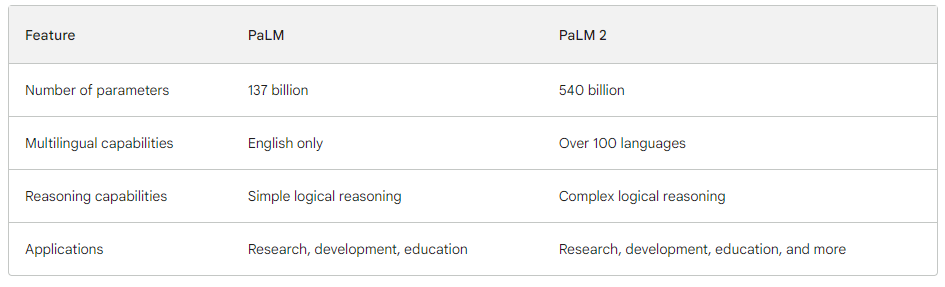

Compare PaLM and PaLM 2

PaLM (Pathways Language Model) and PaLM 2 are both large language models from Google AI. They are both trained on a massive dataset of text and code, and they can both generate text, translate languages, write different kinds of creative content, and answer your questions in an informative way.

However, there are some key differences between PaLM and PaLM 2. PaLM has 540 billion parameters. Google has not published a parameter count for PaLM 2, but it describes PaLM 2 as smaller than PaLM while performing better overall. The improvement therefore comes from compute-optimal scaling, a better dataset mixture, and an updated architecture rather than from a larger parameter count.

PaLM 2 is also better at multilingual tasks. It was trained on text from over 100 languages, including parallel multilingual text, while PaLM’s training data was predominantly English. This means that PaLM 2 can understand, generate, and translate text in more languages than PaLM.

Finally, PaLM 2 is better at reasoning. It performs better than PaLM on questions that require complex, multi-step logical reasoning.

Overall, PaLM 2 is a significant improvement over PaLM. It is smaller, more efficient to serve, and better at multilingual tasks and reasoning. This makes it a valuable tool for a wide range of applications, including research, development, and education.

Compare GPT-4 and PaLM 2

Google’s PaLM 2 is a language model trained on large-scale multilingual datasets of text and code. It is designed to perform well on tasks such as machine translation, question answering, and text summarization.

OpenAI’s GPT-4, on the other hand, is a proprietary model designed to generate human-like text. It is not open source, and OpenAI has not disclosed the size of its training dataset. GPT-4 can generate fluent text on a wide range of topics.

Broadly, PaLM 2 is positioned as efficient and accurate for tasks such as natural language understanding, text classification, and summarization, while GPT-4 is known for strong language modeling and generation. PaLM 2 was announced in May 2023, about two months after GPT-4. Because neither company has published full training details, the sizes of the two training datasets cannot be compared directly.

Google reports that PaLM 2 outperforms GPT-4 on some mathematical and reasoning tasks.

Conclusion

PaLM 2 is Google’s next-generation large language model, and it is good at math, code, advanced reasoning and multilingual tasks. It was trained on scientific and mathematical data and can translate spoken languages as well as programming languages. PaLM 2 is also good at generating and understanding nuanced language like idioms and riddles. It is smaller than its predecessor, PaLM, but performs better overall and is more efficient to serve. Google says it is committed to releasing only safe and ethical tools to the public and has trained PaLM 2 to de-escalate aggressive and toxic prompts.